A Guilty Voice: Is Voice Analysis Junk Science or Reliable Evidence?

by Clarence Walker Jr.

Law enforcement officials believe our vocal cords betray us with every syllable we speak. Welcome to a world of technology capable of listening to a human voice and then determining whether a person is concealing the truth. Amidst a sea of clever deceptions and unintended revelations, the Computer Voice Stress Analyzer (“CVSA”) emerges as a powerful tool for decoding the subtle nuances of human speech.

This fascinating field of study has captured the attention of law enforcement agencies, intelligence communities, and researchers alike, offering a window into the complex interplay between our minds and voices.

CVSA software applications were designed to analyze a person’s voice patterns to detect deceptive responses. These programs analyze variations in vocal patterns and visually represent them on a graph to determine if a person is displaying signs of “deception” or “truthfulness.”

Generally, developers of CVSA technology do not say that these devices can ferret out lies. Instead, they emphasize that voice stress analyzers are designed to pick up on microtremors resulting from stress associated with a person attempting to mask or mislead. CVSA proponents often compare the technology to polygraph testing, which attempts to measure changes in respiration, heart rate, and galvanic skin response.

Polygraph Examiners Question the Accuracy

Even advocates of polygraph testing, however, acknowledge its limitations, including the fact that the practice is inadmissible as evidence in a court of law; requires a large investment of resources; and takes several hours to perform, with the subject connected to a machine. Furthermore, a polygraph cannot test audio or video recordings, or statements made either over a telephone or in a remote setting (that is, away from a formal interrogation room), such as at an airport ticket counter. Such limitations of the polygraph, along with technological advances, prompted the development of CVSA software.

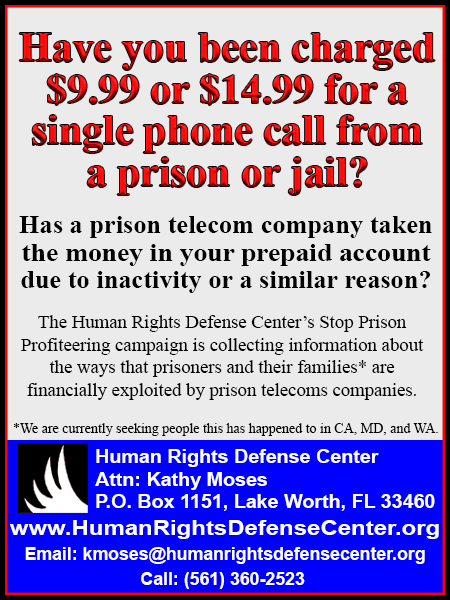

Although the CVSA deception technique of analyzing subtle voice tremors lacks recognized scientific merit, it has been utilized nationwide by law enforcement agencies and prison systems. For example, in 2017, Raymond Whitall, a then-58-year-old prisoner at Salinas Valley State Prison in Monterey County, California, sought to use this controversial technology in his defense. Whitall accused prison guards of severely beating him as he lay helpless on the gym floor.

According to the guards involved, Whitall, who suffered from an auditory and balance-related chronic condition, had allegedly struck one of them with his cane, prompting three others to intervene. An internal prison investigation, however, revealed inconsistencies between the guards’ version and the nature of Whitall’s injuries.

Whitall agreed to undergo the CVSA to determine the veracity of his serious allegations against the guards. This device, as stated, would attempt to detect imperceptible fluctuations in Whitall’s voice to aid prison staff in assessing his truthfulness. However, Whitall was unaware of the device’s ineffectiveness as a lie detector: numerous studies and even its creator have discredited its accuracy, comparing it to random chance, like a coin flip.

After an examiner observed signals in Whitall’s vocal intonation that hinted at deceit, the prison officials, in a shocking turn of events, dismissed his grievance. A recent inquiry by The San Francisco Chronicle reveals that the California Department of Corrections and Rehabilitation (“CDCR”) employed questionable technology to evaluate the trustworthiness of prisoners over the years. Despite extensive analysis of the Computer Voice Stress Analyzer and National Institute for Truth Verification (“NITV”) Federal Services’ acknowledgment of its limited ability to detect lies, the CDCR continued to depend on the system to undermine prisoners’ complaints against prison staff.

NITV Courses

The private company NITV Federal Services offers dozens of courses to law enforcement agencies each year and has sold its machines and training sessions to thousands of departments, billing the CVSA as a cheaper alternative to the polygraph test—another controversial lie-detection technology. Collectively, California agencies have spent at least hundreds of thousands of taxpayer dollars on the CVSA since 2020, the Chronicle found.

Mary Xjimenez, a spokesperson for the CDCR, had not responded to reporters’ email inquiries about Whitall’s case by the time the Chronicle published its article. She later stated, “CVSA has not been used by CDCR to discredit an employee or incarcerated person.”

Finally, after months of persistent questioning from Chronicle reporters, Xjimenez confirmed that the agency is decisively moving forward with regulations to eliminate the use of CVSA across all offices and institutions.

Berkeley Director of Police Accountability, Hansel Alejandro Aguilar, who provides oversight of the city’s police department, said in an email that, “Given the concerns about CVSA’s scientific validity the tool should be re-evaluated to ensure that it meets rigorous standards of accuracy and effectiveness.” He added that his office would recommend a review of the police department’s policy “if warranted.”

The Chronicle’s investigation, based on documents and interviews, shows that law enforcement agencies continued to use the CVSA despite years of concerns regarding its effectiveness and fairness. These concerns included lawsuits and letters from a prominent civil rights law firm addressed to prison officials.

911 Calls

In recent years, law enforcement’s use of scientific-sounding technologies that are unsupported by scientific research has come under close scrutiny. Judges and attorneys have asked federal officials to ban the use of 911 call analysis, an unverified system that uses a person’s speech patterns to determine whether they committed the crime they’re calling the police about.

A recent federal study found that blood spatter analysis, a method of using bloodstain patterns to determine how a crime unfolded, regularly produces “erroneous” findings, with analysts often reaching opposing conclusions when examining the same bloodstains.

Lawsuit

Whitall, now 65, is suing Salinas Valley, where he remains incarcerated, as well as the guards he accused of beating him up. According to Whitall, he spent four months in solitary confinement, while prison staff faced no consequences. His civil trial was scheduled to begin in August 2024.

“Nobody got punished,” Whitall told the Chronicle. “They said I did what I did, and that the officers were right, and that’s it. But I believe some justice will be done pretty soon.”

Whitall had accused guards of beating him without justification, but after he took a CVSA test, the prison said his results deemed him “deceptive” and threw out his complaint.

Appellate Court Upholds Conviction in CVSA Case

Defense attorneys caution that the primary rule of thumb in the legal arena is that suspects in criminal investigations should not talk to the police or submit to testing, including polygraph tests. However, many attorneys don’t explain the CVSA test to their clients.

There are several documented cases in which a person took the CVSA test and police then told them they had failed. Suspects should be aware that if they confess as a result of taking the CVSA, an appeals court will still likely allow their confession and uphold their conviction, despite the test’s questionable ability to determine whether someone is telling the truth and even if police lie about the person failing the test.

In a case decided on June 7, 2007, the New Jersey Superior Court, Appellate Division, confirmed that the trial judge’s admission of a confession obtained using the CVSA did not constitute error. Attorneys for the appellant had argued that, among other things, the subject had “succumbed to a truth verification examination,” a test not shown to be scientifically reliable, which the police used to “overpower (him) by telling him that the test administered showed that he was untruthful.” That, they argued, was the only reason for his confession.

The Court stated, “The CVSA examination was used solely as an investigative tool by the police and the State made no attempt to admit the results at trial. Defendant signed a consent form to take the CVSA examination and acknowledged that he understood the form. Defendant was asked if he had any questions about the examination and he stated that he did not.”

The Court explained: “Additionally, the results were not fabricated. Instead, they were administered by a trained and certified examiner [Det. Killane, Perth Amboy P.D.], who compared his conclusions with those of two other trained and certified examiners. Thus, under the totality of circumstances the defendant’s decision to make the confessions following the CVSA examination was voluntary and not coerced. Based on our review of the entire record, we perceive no sound basis to disturb the judge’s decision to admit the defendant’s confessions.”

How CVSA Works

The CVSA technology functions by detecting subtle variations in an individual’s speech patterns that are imperceptible to the human ear. As detailed in a training manual from the early 2000s, people’s vocal cords are known to exhibit physiological tremors that tend to decrease when they experience stress.

Consequently, when a person is under stress, the frequency and shape of their speech patterns as represented on a graph are expected to differ from those of a non-stressed individual.

Once used by United States military officials to interrogate terrorism suspects, the CVSA was abandoned by the Pentagon in the mid-2000s over concerns about its reliability, according to a 2006 ABC News investigation.

Numerous reports, including a 2008 study funded by the National Institute of Justice, the research arm of the U.S. Justice Department, have concluded that the tool is no better than chance at detecting lies. In 2007, speech sound experts in Sweden observed that no studies have confirmed the human voice produces the microtremors that the CVSA supposedly measures.

CVSA results are generally not admissible in court, according to several federal rulings. Since a 1923 federal appeals court ruling, courts have held that lie-detector exams and other experimental investigative tools must be backed by “demonstrable” scientific evidence and have “gained general acceptance” before their findings can be introduced as evidence. In a 1976 decision by a Maryland appeals court, judges ruled that a Psychological Stress Evaluation, an early iteration of a voice-stress test, was not admissible. “A lie detector test by any other name is still a lie detector test,” the Court declared. Smith v. State, 31 Md.App. 106 (1976).

In 2002, the technology’s parent company, NITV Federal Services, acknowledged in a court filing related to a San Diego homicide case that the CVSA was ineffective at separating fact from fiction. Three men had alleged police used the tool to interrogate them for hours until they falsely confessed to murdering a 12-year-old girl.

ABC News reported that Michael Crowe’s 12-year-old sister, Stephanie, was found stabbed to death in her bedroom. Suspicious about Crowe, the Escondido, Calif., police investigators brought him into the station for questioning and hooked him up to the CVSA in the middle of the night.

Based on tapes recorded during his questioning, Crowe answered “yes” when the detective asked, “Is today Thursday?” But when Crowe replied “no” after a detective asked whether he took Stephanie’s life, the detective told him that he had failed the test.

“I started to think that, you know, maybe the machine’s right, especially when they added on top of it that the machine was getting my subconscious feelings on it, that I could be lying and not even know it,” Crowe, now 21, told the news magazine Primetime. “They said the machine is more accurate than the polygraph and is the best device for telling the truth, for finding the truth.” Those claims were blatant lies.

Once the detective told him that he had failed the test, Crowe said he began to doubt his own memory and wonder whether he might have killed his sister. “I didn’t want to go to prison, and I just wanted to be out of that room,” Crowe recalled. “So my only option was to say, ‘Yeah, I guess I did it,’ and then hope for the best.”

National Institute for Truth Verification

In his sworn declaration, David Hughes, executive director of the company then known as the National Institute for Truth Verification, wrote, “The CVSA is not capable of lie detection.” He continued, “NITV cautions all examiners and purchasers of the product that the CVSA should only be used as an investigative device” to measure what he called “brain stress activity.”

Reached for comment last March, NITV Federal Services claimed the science behind the tool was sound, and that research debunking it was part of a broad effort to discredit the CVSA by the polygraph industry.

“It seems quite surprising, actually silly, that for an instrument that is no better than chance, over 3,000 accredited law enforcement agencies now depend on the CVSA for their truth verification needs,” a company spokesperson told the Chronicle. “Why are they so afraid of it? It’s just another investigative tool to help guide investigations.”

The company declined to provide a list of its clients in response to a request from the Chronicle. However, its website states that over 2,700 law enforcement agencies nationwide use the CVSA, 270 of them in California. The company also says that nearly 400 other agencies, such as fire departments, correctional facilities, and airlines, use its technology, and that government officials in New Zealand, Nigeria, and Pakistan have adopted the tool.

Xjimenez said the state prison agency had significantly narrowed its use of the CVSA in recent years and no longer employs it to assess allegations of guard abuse. Still, the department has declined to revisit past complaints like Whitall’s and has continued to use the CVSA to “assist in determining an incarcerated person’s truthfulness” during a threat assessment, an investigation triggered by a possible threat made against prison staff, according to Xjimenez.

At Least 26 California Police Departments Utilize CVSA in Hiring Process

The Chronicle identified 26 California law enforcement agencies that referenced using the CVSA in job listings or in other materials. Five of the agencies did not respond to a reporter’s repeated interview requests. Seven said they no longer use the tool or had never used it.

Ten agencies said they use the CVSA only to interview prospective officers as part of the hiring process. Law enforcement agencies that operate in the Bay Area, including the Berkeley Police Department, the Alameda County Sheriff’s Office and the California Highway Patrol, confirmed they use CVSA. But when the Chronicle asked for additional details about their use of it, they did not elaborate.

The California Department of Justice’s law enforcement division uses the CVSA “for hiring purposes during the background process for Special Agent candidates,” a spokesperson for the Attorney General’s Office said. “The CVSA is not used during investigations.”

A spokesperson for the Tulare County Probation Department said, “We have not seen any recent research on the accuracy of the CVSA.” She added that results from CVSA exams are “never used to approve or disqualify a candidate.”

A representative for the California State Parks Department also said it has never used “deceptive” findings to eliminate a peace officer candidate from its job pool. The Vacaville Police Department also uses the CVSA when hiring officers. Asked about studies debunking CVSA, Sgt. Robert Myers, a detective for the police department, said: “I’m aware.” He continued: “There are experts for both sides, for and against it.”

Two agencies—the Kings County District Attorney’s Office and the Stanislaus County Sheriff’s Office—said they use the technology for criminal investigations, though when asked by reporters for specific cases and details, neither agency provided them. A third, the Fresno Police Department, said its investigators have used the device during criminal investigations in the past but could not recall a time its officers had done so in at least the past two years.

The Monterey County Sheriff’s Office hosted a CVSA training course in 2024, according to NITV, and the department said it plans to implement the technology in the future. The agency did not respond to a reporter’s repeated requests for more information. Criminal psychology experts caution that any use of the CVSA is inadvisable because the tool is not only ineffective and inadmissible in court but could be used to pressure prisoners into making false confessions.

“It has no grounding in science whatsoever,” declared Maria Hartwig, a professor at John Jay College of Criminal Justice in New York City and an expert on the psychology of deception. “It’s pure bulls---, in the technological and philosophical sense.” Hartwig called the prison department’s use of the CVSA “entirely inconsistent with a commitment to actual fact-finding.”

Richard Leo, a University of San Francisco professor of law and psychology and expert on false confessions, called law enforcement use of CVSA “professional malpractice.”

“It’s like saying a Ouija board or an astrological chart is an investigative tool,” said Leo, who wrote about the CVSA in his book, “Police Interrogation and American Justice.” Leo said he “praised” the state prison system for abandoning the technology.

‘Pseudoscience’

The website for NITV Federal Services links to 10 studies or “study summaries” that the company claims “underpin the scientific validity” of the CVSA. But the majority of these studies are either decades old, don’t directly test the CVSA’s reliability as a lie detector, or have not undergone peer review—an essential part of the scientific process, which allows experts to ferret out poor quality research.

The website references a 2012 study billed as the “first peer-reviewed” study on the device. It purports to verify the effectiveness of CVSA, but it was co-authored by James Chapman, then the director of the National Association of Computer Voice Stress Analysts, an arm of NITV.

NITV claimed the study was published in a journal called Criminalistics and Court Expertise. While there is no English-language journal by that name, the study’s other co-author, Marigo Stathis, provided the Chronicle with a link to an obscure journal published by Ukraine’s Ministry of Justice with a title that translates to “Criminalistics and Forensics.” Stathis also sent a PDF copy of the edition that published their study. At the time, the journal’s title translated to “Criminology and Court Expertise.” NITV Federal Services did not respond to Chronicle reporters’ questions about these studies or the journal.

The California Commission on Peace Officer Standards and Training, or POST, a government agency that sets statewide training and standards for law enforcement, says on its website that police officer candidates may be subject to voice stress analysis to verify the “truthfulness of information” during the hiring process.

Katie Strickland, a spokesperson for POST, said the organization has no authority to encourage or dissuade law enforcement agencies from using the CVSA. “The hiring authority and discretion falls to the individual agencies,” Strickland said.

Attorney Harry Stern, who frequently represents police officers, said none of his clients have lost employment opportunities over a failed CVSA exam. He said that, in his experience, most law enforcement agencies use polygraphs as part of the hiring process. “In a general sense, I am wary of any technology that isn’t admissible in court,” Stern said. “There is a reason for that: It hasn’t passed objective scientific muster.”

On law enforcement Reddit forums, prospective police officers trade advice on how to pass voice stress background checks, while venting about the technology. One poster called it “old pseudoscience poly(graph) bulls---.” Another said that “they just use these things to get rid of applicants they don’t really want.”

Prisoners’ Complaints Dismissed Based on CVSA Technique

Rita Lomio, a senior staff attorney at the Prison Law Office, a Berkeley-based nonprofit civil rights law firm, said she first became aware of California prison guards’ use of voice stress analysis in 2017.

As part of her work on Armstrong v. Newsom, a class action lawsuit filed by prisoners with disabilities against California, Lomio’s team spent a year investigating staff abuse at Salinas Valley State Prison. As they combed through prisoners’ complaints that the prison had dismissed due to lack of evidence, Lomio said in an interview, a pattern emerged: “CVSA was referenced in many of them.”

Lomio said her team identified several red flags in the way prison staff had used voice stress analyzers during misconduct investigations. Prison officials asked only incarcerated people to take the test, not officers accused of wrongdoing; they used it solely on prisoners who said they had witnessed abuse occur, not those who denied seeing anything; and they dismissed abuse complaints outright if a prisoner declined to be interviewed using a CVSA. Yet if the test indicated a prisoner was truthful, Lomio said, officers dismissed the complaint anyway. “It really became a ‘heads I win, tails you lose,’ Orwellian technique,” she said.

The Chronicle requested records from the prison system documenting any investigations that included use of the CVSA and resulted in a finding that guards used excessive force, lied or engaged in sexual misconduct. Corrections department records officials initially denied that the terms “CVSA” or “voice stress”—or similar phrases—appeared in any documents related to such investigations.

When Chronicle reporters presented officials with at least one example that met the criteria, staff members said they would review their response. In March 2024, a department spokesperson said the complaint provided by the Chronicle was the only one like it. The agency is not required to disclose records involving investigations of guard misconduct in which a staff member is cleared. The department also said it could not tell reporters how many exams its staff had administered to prisoners since 2021, saying it “does not maintain its investigative records in a manner that would allow us to readily gather the data.”

At Salinas Valley, Lomio’s team found dozens of instances in 2017 where officers allegedly used voice stress tests to close out accusations of staff misconduct filed by prisoners with disabilities, she told the Chronicle.

In one case, two prisoners “reported being intimidated about the manner in which the CVSA was conducted,” according to a letter Lomio wrote to the prison agency’s Office of Legal Affairs. One prisoner said the voice test operator warned him he would “pursue him to the full extent of his ability” if he lied.

Valuable Technique for Prison Officials

During a separate investigation into the prison’s complaint review process by the California Office of the Inspector General (“OIG”), which monitors the corrections department, an incarcerated person alleged in 2018 that a guard made several derogatory comments about the prisoner’s sexual identity.

A second prisoner corroborated the account, but when investigators insisted the witness undergo a CVSA exam, he declined. The report did not indicate how that case ended. “With this approach to collecting evidence, a prisoner’s statements held no value as evidence unless it was validated by a machine,” OIG inspectors wrote in a 2019 report.

In 2017, after reviewing the Salinas Valley complaints, attorneys at the Prison Law Office wrote to the CDCR that the use of voice stress analysis was part of a pattern of “problematic interview techniques that indicated bias in favor of the staff member and hostility towards the complainant.”

The following year, Kathleen Allison, then-director of the CDCR’s Division of Adult Institutions, directed her staff to stop using CVSA exams when investigating prisoner complaints of staff misconduct, according to a copy of Allison’s memo. The CDCR began changing who investigates guard misconduct claims in 2022, in response to lawsuits filed by prisoners’ rights attorneys. As a result, all complaints of staff misconduct—including those filed by prisoners—are screened by the department’s Office of Internal Affairs. If it is a complaint of serious staff misconduct, it is investigated further by internal affairs instead of prison staff.

Internal Affairs staff remained authorized to use the CVSA in staff misconduct investigations until last year, according to official guidance that the CDCR issued to its Office of Internal Affairs and that the prison agency released to the Chronicle in response to a public records request.

The office has administered three CVSA exams since 2021 and has three certified CVSA examiners on staff, according to information provided by the corrections department in response to a public records request. Prison officials did not share additional details about the role the exams played in their investigations or what the investigations concerned. The department also used voice stress analyzers on job applicants for at least seven years. From 2021 to early 2023, when they discontinued the test as part of the hiring process, prison staff administered 4,706 CVSA exams for this purpose.

As of 2023, new regulations allowed prison officials to use CVSA only in threat assessments. When the CDCR passed these regulations, one public commenter asked why prisons were using the technology at all, given that its findings were “not admissible in court due to significant doubts about its ultimate accuracy.” Officials replied that while the results were not admissible, they still thought the tool was “useful” and would be voluntary for prisoners, something Xjimenez also stressed in her communications with the Chronicle.

One reason that law enforcement might continue to use the CVSA despite research debunking it, Hartwig said, is that officers may believe people will be more likely to tell the truth with a perceived lie detection device present. While there is some evidence to back up this idea, it’s not strong enough to justify departments’ use of the tool, she said. “Does it increase honesty? Yes,” Hartwig said. “How much? Not enough to warrant its use.”

Prisoners Unaware of CVSA Trickery

Guards claimed Whitall had struck one of them in the hand and “reddened” it with his cane, prompting the guards to use force against Whitall. But photos showed that the guard’s hand appeared unharmed.

In a series of interviews from prison, Whitall confirmed he wasn’t forced to take the exam. But he also said he was not informed of its lack of scientific validity before consenting. The episode began on Feb. 28, 2017. According to guard reports, Whitall was placed in a holding cell for being “disruptive” in the dining hall. When several guards went to release him, they said, Whitall suddenly swung his cane over his shoulder, striking an officer on his left shoulder and right hand. According to the report, it took three guards to wrestle Whitall to the ground, where they restrained him in handcuffs and chains.

Whitall denied hitting the officer. In legal documents and multiple prison phone interviews with the Chronicle, he said he had requested a replacement splint for his sprained finger, and in response, guards placed him in the holding cage for a strip search. Fifteen minutes after the search, he said, he began arguing with a guard over whether he had used the proper channels to request help for his finger sprain. Suddenly, Whitall said, four guards formed a semicircle around the cell. “I knew something was gonna happen,” he said.

Whitall has Meniere’s disease, an inner ear disorder that causes vertigo. As the guards opened the cell and he walked out, he said he decided to pretend he was having an episode. He fell to the floor and lay still. An officer, Whitall said, warned him that if he did not get up, the officer would accuse him of attacking one of the guards.

Whitall said he stayed down, hoping to calm the situation, but the guards began kneeing and striking him in the face and around his head. One of them said, “Stop resisting.” Whitall said he heard an officer reporting over the radio that a “battery on a peace officer” had occurred.

Medical staff in the prison’s health unit documented injuries on Whitall, including a concussion and cuts and bruises around his eyes, along with the finger sprain that he said had started the entire episode. But only Whitall was punished. He spent four months in solitary confinement and lost his phone and other privileges.

Whitall shared his story with Prison Law Office attorneys, who raised it with prison staff. That July, five months after the alleged attack, the lieutenant in charge of investigating Whitall’s complaint concluded that Whitall’s injuries were not consistent with the guards’ story. The investigating officer noted that, according to medical documents from 2016, Whitall had “bone-deep pain” and arthritis in both arms, which would have made it difficult for him to swing his cane in the manner described by the guards.

Photos taken immediately after the incident and obtained by the Chronicle included a close-up of the guard’s hand, the one he claimed had turned “red” after being struck by Whitall’s cane. It showed an ordinary, unblemished hand, with no visible discoloration or other injuries. The photos of Whitall, however, showed that his face was bruised and swollen.

Officer Suggested Whitall Take CVSA Test

In September 2017, certified examiner Lt. D. Villegas asked Whitall six questions using the voice stress analyzer, and Whitall answered the first five without a problem. But on the sixth—“Did you batter the staff with your cane?”—Whitall’s vocal patterns indicated “deception,” Villegas wrote in a memo, “which brings his reliability into question.”

On the form Whitall signed agreeing to the CVSA, one paragraph reads: “The CVSA examination is completely optional and would have no effect on the resource team conducting a thorough inquiry into the allegation.” But because of Villegas’ memo, investigators closed their inquiry into Whitall’s assault claims. The following February, the prison system also denied Whitall’s appeal to reopen the investigation, finding that his “allegations were appropriately reviewed and evaluated by administrative staff.” Seven years later, Whitall said he remained haunted by the violence he experienced and the way prison staff wielded the CVSA against him.

After the exam allegedly indicated Whitall’s deceptiveness, he recalled, the guards involved in the incident bullied him for months. One time, one of the officers grabbed Whitall’s apple from his lunch tray, threw it onto the floor, and then slammed Whitall’s head into a door, he wrote in court documents.

Whitall said he still avoids the gym where the events took place and steers clear of guards standing in groups. When he has to recall the incident in preparation for his upcoming civil rights trial against the prison, he has to take frequent breaks. The events play back in a “loop,” he added. “I think about this thing, and it still impacts me somehow…. You’d think I’d be over it by now, but I’m not.”

CVSA Intelligence Investigations

Voice recognition has started to feature prominently in intelligence investigations. Examples abound. When the Islamic State of Iraq and Syria (“ISIS”) released the video of journalist James Foley being beheaded, experts from all over the world tried to identify the masked terrorist known as Jihadi John by analyzing the sound of his voice.

Documents disclosed by Edward Snowden revealed that the U.S. National Security Agency has analyzed and extracted the content of millions of phone conversations. Call centers at banks are using voice biometrics to authenticate users and to identify potential fraud.

But is the science behind voice identification sound? Several articles in the scientific literature have warned about the quality of one of its main applications—forensic phonetic expertise in courts. There are at least two dozen judicial cases from around the world in which forensic phonetics played a controversial role. Recent figures published by INTERPOL, the international organization that represents the police forces of 190 countries, indicate that half of forensic experts still use audio techniques that have been openly discredited.

For years, movies and television series like CSI have painted an unrealistic picture of the “science of voices.” In the 1994 movie Clear and Present Danger, an expert listens to a brief recorded utterance and declares that the speaker is “Cuban, aged 35 to 45, educated in the eastern United States.” The recording is then fed into a supercomputer that matches the voice to that of a suspect, concluding that the probability of correct identification “is 90.1 percent.”

This sequence sums up a good number of misimpressions about forensic phonetics, which have led to errors in real-life justice. Indeed, that movie scene exemplifies the so-called “CSI effect”—the “phenomenon in which judges hold unrealistic expectations of the capabilities of forensic science,” says Juana Gil Fernandez, a forensic speech scientist at the Consejo Superior de Investigaciones Científicas (Superior Council of Scientific Investigations) in Madrid, Spain.

Acoustical Society

In 1997, the French Acoustical Society issued a public request to end the use of forensic voice science in the courtroom. The request was a response to the case of Jerome Prieto, a man who spent 10 months in prison because of a controversial police investigation that erroneously identified Prieto’s voice in a phone call claiming credit for a car bombing. There are plenty of troubling examples of dubious forensics and downright judicial errors, which have been documented by Hearing Voices, a science journalism project on forensic science carried out in 2015 and 2016.

The exact number of voice investigations conducted annually is difficult to track since there is no centralized database tracking such activities. However, experts in Italy and the United Kingdom suggest there are hundreds of these investigations carried out each year in their respective countries.

Typically, a voice investigation involves several core tasks, which may include transcribing recorded speech, comparing the voice of a person under suspicion with an intercepted voice, presenting the suspect’s voice in a lineup alongside other voices, analyzing dialect or language patterns to identify a speaker, interpreting background noises, and assessing the authenticity of recordings.

The recorded fragments subject to analysis can be phone conversations, voice mail, ransom demands, hoax calls, and calls to emergency or police numbers. One of the main hurdles voice analysts have to face is the poor quality of recorded fragments. “The telephone signal does not carry enough information to allow for fine-grained distinctions of speech sounds. You would need a band twice as broad to tell certain consonants apart, such as f and s or m and n,” said Andrea Paoloni, a researcher at the Ugo Bordoni Foundation and the foremost forensic phonetician in Italy until his death in November 2015.

To make things worse, recorded messages are often noisy and short and can be years or even decades old. In some cases, simulating the context of a phone call can be particularly challenging. Imagine recreating a call placed in a crowded movie theater using an old cellphone or one made by an obscure foreign brand.

In a scholarly article from 1994 published in the Proceedings of the ESCA Workshop on Automatic Speaker Recognition, Identification, and Verification, Hermann Künzel, an established authority in the field, estimated that approximately 20% of the audio fragments analyzed by the German federal police consisted of a mere 20 seconds of usable voice data.

George Zimmerman

Despite these challenges, some forensic professionals remain willing to draw conclusions from audio samples of notably poor quality. In the nationally high-profile case of George Zimmerman, who fatally shot Trayvon Martin in 2012, a prominent expert relied on very limited audio to determine whether the screams on a recording were those of Martin or Zimmerman. The expert claimed to be able to extract a distinct voice profile from the recording and to interpret the screams audible in the background of the emergency call made by a neighbor.

These errors are not isolated exceptions. A survey published in June 2016 in the journal Forensic Science International by INTERPOL showed that nearly half of the respondents (21 out of 44)—belonging to police forces from all over the world—employ techniques that have long been known to sit on shaky scientific grounds. One example is the simplest and oldest voice recognition method: unaided listening, leading to subjective judgment by a person with a “trained ear” or even to the opinion of victims and witnesses.

In 1992, Guy Paul Morin, a Canadian, was sentenced to life in prison for the rape and murder of a nine-year-old girl. In addition to other evidence, the victim’s mother said she had recognized Morin’s voice. Three years later, a DNA test exonerated Morin. This kind of mistake is not surprising. In a study published in Forensic Linguistics in 2000, a group of volunteers who knew each other listened to anonymous recordings of the voices of various members of the group. The rate of recognition was far from perfect, with one volunteer failing to recognize even his own voice.

This does not imply, however, that automated methods are always more accurate than the human ear. In fact, the first instrumental technique used in forensic phonetics has been regarded as lacking any scientific basis for years, even though some of its variations are still in use, according to the INTERPOL report. That technique is voice printing, or spectrogram matching, in which a human observer compares the spectrogram of a word pronounced by the suspect with that of the same word pronounced by an intercepted speaker. A spectrogram is a graphic representation of the frequencies of the voice spectrum as they change over time while a word or sound is produced.

Voice printing gained notoriety with the 1962 publication of a paper by Lawrence G. Kersta, a scientist at Bell Labs, in the journal Nature. But in 1979, a report by the National Science Foundation declared that voiceprints had no scientific basis. The authors wrote that spectrograms are not very good at differentiating speakers, and they are too variable.

Spectrogram matching is a hoax, pure and simple.

“Comparing images is just as subjective as comparing sounds,” said Paoloni. Nevertheless, the technique still enjoys a lot of credibility. In 2001, after DNA testing, David Shawn Pope was acquitted of aggravated sexual assault after spending 15 years in a state prison. Pope’s conviction was partly based on voiceprint analysis.

911 Calls

Tracy Harpster, a former deputy police chief from suburban Dayton, Ohio, embarked on a mission to gain national recognition for his revolutionary business—a method capable of identifying whether 911 callers are guilty of the crimes they report. “I know what a guilty father, mother, or boyfriend sounds like,” Harpster boasted, according to Forensic Magazine. Harpster insists that police officers and prosecutors nationwide can master these skills as well.

For instance, Harpster stated that through precise linguistic analysis, law enforcement officials and prosecutors can uncover guilt by examining crucial aspects of callers’ speech patterns, including tone of voice, pauses, word choice, and grammar. He firmly believes that even a seemingly innocuous word like “hi,” “please,” or “somebody,” when stripped of context, can reveal a murderer on the other end of the line. Researchers who have tried to corroborate Harpster’s claims have failed thus far. The experts most familiar with his work warn that it shouldn’t be used to lock people up.

When the FBI’s Behavioral Analysis Unit investigated whether the voice analysis methods merited closer attention for building criminal cases against suspects, agents tested Harpster’s guilty indicators against a sample of emergency calls, the majority from military bases, to replicate what they called “groundbreaking 911 call analysis research.”

Unsatisfied with the results, the agents warned others against using such methods to bring criminal charges. The indicators were so inconsistent, ProPublica reported, that many calls went into the “opposite direction of what was previously found.” What should have been a wake-up call for judges and prosecutors who favor using voice analysis to indicate a person’s guilt has only fired them up to keep using the discredited technique.

Many prosecutors in various states know that Harpster’s methods are controversial and lack scientific credibility. Despite this awareness, some prosecutors continue to endorse these techniques and use 911 call analysis as a crucial tool in securing convictions against people whose guilt is highly questionable.

Russell Faria

Lincoln County, Mo., prosecutor Leah Askey (who later went by Leah Chaney) wrote Tracy Harpster a complimentary email outlining how she bypassed the legal rules to capitalize on his voice analysis methods against unsuspecting defendants. “Of course, this line of research is not ‘recognized’ as a science in our state,” Askey wrote, explaining how she maneuvered around the hearings required to establish the method’s legitimacy. She said she disguised 911 call analysis in court by “getting creative … without calling it ‘science.’”

Excited to use Harpster’s voice techniques, prosecutor Askey unleashed the controversial methods in the case of Russell Faria, a man wrongfully convicted of murdering his wife Betsy Faria. She was stabbed 55 times. During the trial, Askey introduced a recording of Faria’s distressed 911 call to the jury, including testimony from a dispatch supervisor who suggested the call was fabricated. Despite objections from the defense attorney, the judge allowed this unreliable testimony into evidence.

A Jury Convicted Russell Faria Without Substantial Evidence

The prosecution’s entire case was basically a sham. Police found no blood on Faria. Also, Faria’s cellphone pretty much confirmed his alibi, i.e., he was nowhere near the murder scene. And four different witnesses testified that Faria was with them on the night of the murder—December 27, 2011. Prosecutors never pinned down a time of death; however, they appeared to suggest Faria killed his wife right before calling 911.

Even this unproven theory dramatically changed when prosecutor Leah Chaney sent shockwaves through the courtroom after she had the audacity to accuse four alibi witnesses of conspiring with Russell to murder Betsy Faria. Similar to a lion ambushing its prey, she told jurors that four different people (defense witnesses), not counting the defendant, had cooked up a wicked plot and then waited until the right time to strike. Defense attorney Joel Schwartz leapt to his feet to object. He explained later, “I was outraged. These were four innocent people who were not guilty of anything, they were accused of murder in that courtroom…. Ridiculous, absolutely ridiculous. There’s not one shred of evidence, not one,” Schwartz told a Fox reporter. Subsequently, Faria was found guilty and handed a life sentence on November 21, 2013.

After Faria successfully appealed his case, Chaney refused to let the man walk free. She pursued another prosecution against Faria in 2015. Chaney insisted on having the dispatch supervisor testify about the reasons the supervisor believed Faria was guilty, citing his word choice and demeanor during the 911 call. The supervisor emphasized that it was Harpster’s “analytical class” that equipped her to critically evaluate calls and anticipate their outcomes. Chaney tried like hell to pull the same voice analysis stunt, yet the dog didn’t hunt. The scheme backfired and forced the judge to intervene, cutting the testimony short, and Faria was finally acquitted in November 2015.

Before the retrial, Faria’s defense team discovered more than 100 photographs of the crime scene taken by police that had not been disclosed to the defense. The newly discovered photos showed no evidence someone cleaned up blood at Faria’s home after the murder, as testified to by a detective during Faria’s first trial. The lying detective said no photos existed because his camera malfunctioned and could not take pictures.

Real Killer Identified

The real murderer of Russell Faria’s wife was identified as Pamela Hupp. Betsy Faria had named her friend Pamela as the sole beneficiary of a $150,000 life insurance policy four days before Pamela murdered Betsy. “I believe her motivation was simple, it was greed,” said then-Lincoln County prosecutor Mike Woods. Hupp testified against Faria and contributed to his conviction, all the while knowing that she was the real killer.

Hupp’s involvement in the murder of Louis Gumpenberger in 2016 in O’Fallon, Missouri, contributed to Russell Faria’s attorney convincing an appeals court to overturn Faria’s conviction, along with the dubious voice analysis used during Faria’s trial. Hupp is serving life in prison for Mr. Gumpenberger’s murder, and she is scheduled to face trial in September 2026 for Betsy Faria’s murder.

Russell Faria spent three-and-a-half years in prison for a murder he did not commit. Undeterred by the outrageous series of events, Harpster sought to leverage the episode to his benefit, both to market himself to fresh customers and to include the case in an upcoming book chapter. When asking Chaney for her endorsement, he stated, “We don’t have to say (Russell Faria’s conviction) was overturned.”

How Police Tried to Frame Kathy Carpenter for Murder Based on 911 Call

On February 26, 2014, the night was chilly and clear when Kathy Carpenter hurried from a remote residence nestled in Colorado’s Rocky Mountains toward the police station in downtown Aspen.

Gripping the wheel firmly with one hand and her cellphone with the other, Carpenter described her grim discovery to a 911 dispatcher between sobs: “Ok my, my, my friend had a…. I found my friend in the closet, and she’s dead.” The victim, Nancy Pfister, a prominent ski resort heiress and benefactor, had been fatally attacked.

In the Colorado Bureau of Investigation (“CBI”) report, agent Kirby Lewis documented that “Carpenter requested assistance but was interrupted and hesitated to provide her address immediately upon the dispatcher’s inquiry. She veered off-topic by mentioning Pfister’s dog while discussing the presence of a defibrillator in the house. During this exchange, there was a noticeable pause before she responded, ‘Is there what?’”

Lewis identified 39 indications of guilt and no signs pointing to innocence. As a result, Carpenter was taken into custody eight days later, with her mugshot appearing in various media outlets. Subsequently, she endured three months of incarceration until another person confessed to the crime. The repercussions of such situations are profound, impacting even those who are not convicted.

Many people like Carpenter face unrelenting scorn and ridicule and even criminal prosecution from the misinterpretation of their phone conversations. Reflecting on her experience, Carpenter expressed how her life was irrevocably altered by the incident. She lost her job, depleted her savings, lost her residence, and had her vehicle repossessed. Burdened with the daily pressure of being a suspected murderer, she was diagnosed with post-traumatic stress disorder, forcing her to relocate and adopt a discreet lifestyle.

Despite her innocence, the stigma persists, with others wrongly labeling her as a murderer. In her words, she yearns for seclusion and anonymity to escape the relentless scrutiny.

After prosecutors dismissed all of the charges against Carpenter, Greg Greer, the Glenwood lawyer who represented her, along with attorney Kathleen Lord of Denver, played for reporters the 911 call Carpenter made on February 26, 2014, and displayed crime scene photos. Minutes before the call, she had used a key to open a locked closet that held Pfister’s body, put there by the killer. Statements she made shortly afterwards to the 911 dispatcher convinced CBI investigator Lewis of her guilt, Greer said.

According to the Aspen Daily News, CBI agent Lewis, the agency’s head of off-site investigations, and Lisa Miller, an investigator with the district attorney’s office, repeatedly questioned her about the 911 call, Greer said. But through 20 hours of interviews, Carpenter never wavered from her story, he said.

“The 911 call had earlier been sent to Lewis, who listened to it and sent back a transcript that everybody used, and [he] weighed in and said, ‘After listening to this 911 call, Kathy Carpenter is guilty,’” Greer said.

Agent Lewis Changed Her ‘Words’ on 911 Recording

The transcript of the recording was attached to the arrest affidavit. An erroneous play on words about blood resulted in Carpenter’s incarceration for 96 days without bond. But there was a major problem, Greer said.

The transcript says Carpenter told the 911 operator that she had seen blood on Pfister’s forehead. Investigators then told Carpenter, “If you see Nancy Pfister’s forehead, then you saw more than you said you saw in the closet,” Greer said. The problem is she never said that she saw her forehead. Instead, what Carpenter had actually said on the 911 call was that there was blood on the bed’s headboard, Greer said, again playing the recording. “What is your friend wrapped in?” the dispatcher asks her. “I, I, I don’t know,” Carpenter says, weeping. “I don’t know, saw blood on the headboard!” In the CBI transcript, “headboard” was changed to “forehead.” Greer said confidently, “She said ‘headboard’…. Believe me, I’ve listened to this a hundred times.”

So, who murdered Pfister? In October 2014, Trey and Nancy Styler, Pfister’s former roommates, were charged with her murder. Trey confessed to Pfister’s murder and accepted life imprisonment in exchange for the charges against his wife being dropped. What was the motive? Get ready for the crazy zone. Trey claimed he murdered Pfister as punishment for adding interest to the couple’s rent while they lived in her home.

Riley Spitler’s Story

Riley Spitler, then 16, made an errant decision. He thought a gun was empty when he jokingly pointed the weapon at his 20-year-old brother, Patrick, and pulled the trigger, he later told the police. Unfortunately, the bullet struck Patrick in the heart, killing him instantly on December 6, 2014.

Riley’s 911 call for help was almost inaudible as he wailed constantly about what happened. “I think I killed him…. Oh my God my life is over.” Riley, horrified to the point he was unable to remember how to open the glass front door from the inside, cracked the glass with his bare hands.

The brothers’ grieving parents rushed to the hospital and immediately discovered their other son Patrick had died. “I should be dead,” Riley wailed. “He should be living.”

Michigan police charged the young man with murder, not manslaughter. Manslaughter or negligent homicide would have significantly lowered the penalty for Riley. But the shot callers decided he should face a murder charge. During his trial in 2016, prosecutors characterized him as a drug-dealing, gun-toting teen who resented his brother Patrick’s popularity to the point that he murdered him. Lies by the young man began with the 911 call, prosecutors alleged in court.

Enter Det. Joseph Merritt, who had taken Tracy Harpster’s course that magically empowers investigators to determine when a guilty person is on the phone with 911. Merritt testified that after taking Harpster’s course, he applied the voice analysis method in four out of every five cases. In all, Merritt said, the technique had been used by him at least 100 times.

Riley’s attorneys insisted from day one that the shooting was an accident. Determined to convince the judge of that fact, his attorneys vehemently objected to Merritt’s work in the linguistic field because it had not been proven scientifically. But prosecutors argued that Merritt should testify as an expert about the indicators of guilt he identified in Riley’s 911 call on that fateful night.

For instance, when the dispatcher asked, “What happened that he got shot?” Riley responded, “What hap— What do you mean?” This, Merritt wrote in an email to prosecutors, was an attempt to resist the dispatcher. Saying things like “my life is over” showed that he was concerned with himself and not his brother. “Very ‘me’ focused,” Merritt wrote. Riley said again and again that he thought his brother was dead. This is considered to be another guilty indicator known as “acceptance of death,” according to Merritt.

In most states, trial judges are responsible for making sure expert testimony has a reliable foundation. Michigan’s rules of evidence on the admissibility of expert witness testimony are based on the Daubert standard, which was named after the U.S. Supreme Court decision Daubert v. Merrell Dow Pharmaceuticals Inc., 509 U.S. 579 (1993). The Daubert standard is a systematic framework for trial courts to use in assessing the reliability and relevance of expert witness testimony before it is presented to the jury.

Prosecutors in Lyon County, Nev., once wanted a detective trained by Harpster to testify about the 911 call analysis used to incriminate a man accused of shooting his wife. The judge wouldn’t allow it. “I don’t see any reliable methodology or science,” he said. “I’m not going to let you say that it’s more likely that someone who is guilty or innocent or is more suspicious or less suspicious.”

The judge in Riley’s case, a former prosecutor named John McBain, was more credulous, ProPublica reporter Brett Murphy wrote in his story about the junk science behind 911 call analysis. McBain let Merritt testify as an expert witness and accepted the reliability of 911 call analysis on its face. He maintained that Harpster’s course is recognized by the Michigan Commission on Law Enforcement Standards. This, McBain said, was proof of 911 call analysis’ value.

Joe Kempa, the commission’s acting deputy executive director, clarified that the commission does not technically certify or accredit courses—it just funds them. There is little review of the curriculum because the commission approves up to 10 courses a day from too many fields to count, he said. Accrediting each would be too hard. As long as a course is “in the genre of policing” without posing an obvious health threat, it will likely be approved for state funds, according to Kempa.

A jury convicted Riley of second-degree murder, and he was sentenced to 46 years in a Michigan state prison. “This case is about junk science,” his attorney argued, “used to convict a 16-year-old of murder.” In 2017, a Michigan appellate court reversed his conviction, specifically citing the fact that the trial court improperly admitted testimony from a detective presented as an expert in “linguistic statement analysis” without properly determining that his testimony was based upon “reliable principles and methods,” as required by MRE 702. In July 2019, Riley was sentenced to 4-15 years in prison on a single count of manslaughter and was released in 2020.

Different Interpreted Sounds

The scientific community has rejected certain voice analysis methods but has yet to establish unanimous agreement on the most reliable approach to voice identification. According to Juana Gil Fernandez, there exist two distinct viewpoints on this matter. “Linguists support the use of semi-automatic techniques that combine computerized analysis and human interpretation, while engineers attribute more importance to automatic systems.”

Acoustic Phonetic

Semi-automatic techniques are still the most widely used. These methods are called “acoustic-phonetic” because they combine measurements obtained by listening (acoustic) with the output of automated sound analysis (phonetics). Experts who rely on acoustic-phonetic methods usually start by listening to the recording and transcribing it into phonetic transcription. They then identify a number of features of the voice signal. The high-level features are linguistic, e.g., a speaker’s choice of words (lexicon), sentence structure (syntax), the use of filler words such as “um” or “like,” and speech difficulties such as stuttering.

The sum of these characteristics is the idiolect—a person’s specific, individual way of speaking. Other high-level qualities are the so-called suprasegmental features such as voice quality, intonation, number of syllables per second, and so on.

Lower-level characteristics, or segmental features, mostly reflect voice physiology and are better measured with specific software. One basic feature is the fundamental frequency. If the voice signal is divided into segments a few milliseconds long, each segment will contain a vibration with an almost perfectly periodic waveform.

The frequency of this vibration is the fundamental frequency, which corresponds to the vibration frequency of the vocal folds, and contributes to what we perceive as the timbre or tone of a specific voice. The average fundamental frequency of an adult male is about 100 hertz and that of an adult female is about 200 hertz.
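As a rough illustration of how software can measure this feature, the sketch below estimates the fundamental frequency of a single short frame by autocorrelation, which looks for the time shift at which the waveform best matches itself. It is a minimal example under stated assumptions, not the method used by any forensic tool named in this article: the function names, the 8,000-hertz sample rate, the 60-to-400-hertz search range, and the synthetic test frames are all hypothetical.

```python
import math

def estimate_f0(frame, sample_rate, f_min=60.0, f_max=400.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame.

    Picks the lag with the highest autocorrelation inside the range of
    plausible pitch periods, then converts that lag back to a frequency.
    """
    min_lag = int(sample_rate / f_max)  # shortest period considered
    max_lag = int(sample_rate / f_min)  # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation of the frame with itself at this lag.
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

def synthetic_voiced_frame(f0, sample_rate, duration=0.04):
    """Hypothetical test signal: a fundamental plus two weaker harmonics."""
    n = int(sample_rate * duration)
    return [
        math.sin(2 * math.pi * f0 * t / sample_rate)
        + 0.5 * math.sin(2 * math.pi * 2 * f0 * t / sample_rate)
        + 0.25 * math.sin(2 * math.pi * 3 * f0 * t / sample_rate)
        for t in range(n)
    ]

SAMPLE_RATE = 8000  # assumed, roughly telephone-grade audio
male_like = estimate_f0(synthetic_voiced_frame(100.0, SAMPLE_RATE), SAMPLE_RATE)
female_like = estimate_f0(synthetic_voiced_frame(200.0, SAMPLE_RATE), SAMPLE_RATE)
print(round(male_like), round(female_like))
```

Clean synthetic tones are the easy case. Real recordings that are noisy, short, or shouted make this kind of estimate far less stable.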

It can be hard to use this feature to pin down a speaker. On the one hand, it varies very little between different speakers talking in the same context. On the other hand, the fundamental frequency of the same speaker changes dramatically when he or she is angry or shouting to be heard over a bad phone connection.

Other segmental features commonly measured are vowel formants. When we produce a vowel, the vocal tract (throat and oral cavity) behaves like a system of moving pipes with specific resonances. The frequencies of these resonances (called the formants) can be plotted in a graph that represents a specific “vowel space” for each speaker, and the graph can be compared to that of other speakers.

Despite its widespread use, the acoustic-phonetic method presents several challenges. Its semi-automated nature introduces an element of subjective interpretation, leading to potential disparities in conclusions among experts using similar techniques.

Furthermore, there is a notable lack of comprehensive data regarding the prevalence and variation of phonetic characteristics beyond fundamental frequency within the general population. Consequently, some experts contend that it is improbable to definitively ascertain a speaker’s identity solely through voice analysis. At best, experts can determine the compatibility between two voices.

False Positives

During the 1990s, a novel system emerged that aimed to reduce human subjectivity—automatic speaker recognition (“ASR”). With ASR, recordings undergo processing through specialized software to extract and categorize signal features, which are then compared against a voice database. The underlying algorithms typically partition the signal into short timeframes to extract frequency spectra, which are visual or numerical representations of a signal’s distribution of power across different frequencies.

These spectra are subjected to mathematical transformations to derive cepstral coefficients, which are numerical representations of a signal’s short-term power spectrum and are especially useful in speech processing to capture vocal tract characteristics. Essentially, cepstral coefficients serve as a model for the speaker’s vocal tract shape. “What we do is very different from what linguists do,” says Antonio Moreno, vice president of Agnitio, the Spanish company that produces Batvox, the most widely used ASR system, according to INTERPOL.
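To make the idea of cepstral coefficients more concrete, here is a minimal sketch that computes the plain real cepstrum of one short frame: take the frame’s spectrum, take the logarithm of its magnitude, and transform back. This is an assumption-laden illustration, not Batvox’s algorithm; production systems commonly use refinements such as mel-frequency cepstral coefficients, and the 64-sample damped oscillation used as input is a hypothetical stand-in for a voiced sound.

```python
import cmath
import math

def dft(values):
    """Naive discrete Fourier transform (adequate for one short frame)."""
    n = len(values)
    return [
        sum(values[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def real_cepstrum(frame):
    """Inverse DFT of the log magnitude spectrum of one frame."""
    n = len(frame)
    # Floor the magnitude so the logarithm never sees zero.
    log_magnitude = [math.log(max(abs(x), 1e-12)) for x in dft(frame)]
    # The log magnitude spectrum of a real signal is symmetric, so the
    # inverse transform is real up to rounding; keep only the real part.
    return [
        sum(log_magnitude[k] * cmath.exp(2j * math.pi * k * q / n)
            for k in range(n)).real / n
        for q in range(n)
    ]

FRAME_LENGTH = 64
# Hypothetical frame: a damped oscillation standing in for a voiced sound.
frame = [0.9 ** t * math.cos(2 * math.pi * t / 8) for t in range(FRAME_LENGTH)]
louder = [4.0 * sample for sample in frame]  # same sound, four times the gain

coefficients = real_cepstrum(frame)
louder_coefficients = real_cepstrum(louder)

# A change in gain shifts only coefficient 0; the others are unaffected.
gain_shift = louder_coefficients[0] - coefficients[0]
shape_change = max(
    abs(a - b) for a, b in zip(coefficients[1:13], louder_coefficients[1:13])
)
print(round(gain_shift, 4), shape_change < 1e-9)
```

The comparison at the end shows one reason the representation is attractive: overall loudness lands in the first coefficient, while the low-order coefficients that follow describe the spectral shape associated with the vocal tract.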

“Our system is much more precise, is measurable, and can be reproduced: two different operators will get the same result from the system.”

However, linguists disagree. “The positive side of ASR is that it needs less human input…. The negative side is that cepstral coefficients reflect the geometry of the human vocal tract, but we are not too different from one another. Hence, the system tends to make false hits,” says Peter French with the University of York, president of the International Association for Forensic Phonetics and Acoustics, and director of J.P. French Associates, the main forensic phonetics company in the U.K.

“I believe that automatic systems should be combined with human intervention,” French said. Other experts are blunter in their criticism. “At the moment, ASR does not have a theoretical basis strong enough to justify its use in real-life cases,” stated Sylvia Moosmüller, an acoustic scientist at the Austrian Academy of Sciences. One of the main reasons for skepticism is the fact that most ASR algorithms are trained and tested on a voice database from the U.S. National Institute of Standards and Technology (“NIST”).

The database is an international standard, but it includes only studio recordings of voices that fail to approximate the complexity of real life, with speakers using different languages, communication styles, technological channels, and so on. “In fact, what the program is modeling is not a voice, but a session, made up of voice, communication channel, and other variables,” Moreno said.

“In the NIST database, the same speaker is recorded through many different channels, and many different speakers are recorded through the same channel,” Moreno explained. “Compensation techniques are tested on this dataset, and allow us to disentangle the speaker’s characteristics from that of the session.” In essence, a system trained using this approach ought to possess the capability to discern the identical speaker across two distinct telephone conversations—one conducted through a landline and the other through a cellular device.

Moreno believes that automatic speaker identification is more than ready to produce valid results and improve the reliability of forensic evaluations. However, he admits that ASR “is one of the many techniques available to experts, and the techniques complement each other: the more advanced labs have interdisciplinary groups.”

The primary issue surrounding ASR may not necessarily stem from inherent flaws within the software but rather from the capabilities and proficiency of the individual utilizing it. “It takes a voice scientist. You cannot just place any operator in front of a computer. These programs are like airplanes: you can buy a plane in one day, but you cannot learn how to fly in three weeks,” says Didier Meuwly, of the Netherlands Forensic Institute.

Geoffrey Stewart Morrison, a linguistics professor at the University of Alberta in Canada, observes that despite the intricate nature of forensic voice matching software, companies frequently promote it broadly, often targeting individuals without specialized knowledge in the field. He emphasizes that Agnitio offers an extensive three-year training initiative; however, the completion rate among the numerous users of Batvox remains notably low, estimated at 20 to 25 percent. It is important to mention that the Batvox tool entails a substantial financial commitment, with costs reaching up to 100,000 Euros.

Modern Statistical Analyses Needed

Whatever method of analysis is chosen, forensic phonetics faces a core scientific hurdle. Unlike fields such as forensic DNA testing, which have adopted Bayesian statistics (a statistical method that updates beliefs as new evidence arrives), forensic phonetics has seen little change in how it analyzes data statistically. Morrison, a leading proponent of Bayesian statistics in forensic phonetics and a contributor to the INTERPOL study, illustrates why this matters.

“To illustrate, consider a scenario where a size 9 shoe print is discovered at a crime scene, and a suspect owns shoes of the same size. In another instance, a size 15 shoe print is found, coinciding with the suspect’s shoe size. In the second case, the evidence against the suspect is stronger, because a size 15 is less common than a size 9,” says Morrison. In other words, it’s not enough to measure the similarity between two shoe prints (or two voices or two DNA samples). Analysts also have to take into account how common those footprints (or voices or DNA) are.

For voice, the problem can be framed as follows: If a suspect and a criminal are the same person, how likely is the similarity between the two voices? And if they are not the same person, how likely is the similarity? The ratio of these two probabilities is called the likelihood ratio, or strength of evidence. The higher the likelihood ratio (for example, for voices that are very similar and very atypical), the stronger the evidence.
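The ratio described above can be sketched in a few lines of Python. This is only an illustration: the function name is hypothetical and the probabilities are invented, not drawn from any real case or from any forensic software. In practice, estimating these two probabilities from voice data is the hard part.

```python
def likelihood_ratio(p_if_same: float, p_if_different: float) -> float:
    """Return P(similarity | same speaker) / P(similarity | different speakers)."""
    if p_if_different <= 0:
        raise ValueError("p_if_different must be greater than zero")
    return p_if_same / p_if_different


# Hypothetical numbers, chosen only to illustrate the idea.
# Typical voice: many other speakers would sound this similar.
typical_voice_lr = likelihood_ratio(0.8, 0.2)
# Atypical voice: few other speakers would sound this similar.
atypical_voice_lr = likelihood_ratio(0.8, 0.0125)

print(f"typical voice:  LR = {typical_voice_lr:.1f}")
print(f"atypical voice: LR = {atypical_voice_lr:.1f}")
```

With these made-up inputs, the same degree of similarity yields a ratio of about 4 for the typical voice and about 64 for the atypical one, which mirrors the shoe-size example: the rarer the characteristic, the more a match means.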

A higher or lower likelihood ratio can increase or diminish the likelihood of guilt, but the probability is also dependent on other cues and evidence, forensic and otherwise. As is typical of Bayesian statistics, the probability is not calculated once and for all but is constantly adjusted as new evidence is discovered.
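The updating process can be sketched using the odds form of Bayes' rule, in which the odds after new evidence equal the odds before it multiplied by that evidence's likelihood ratio. The Python sketch below assumes, for simplicity, that the pieces of evidence are independent of one another; the function names and every number are hypothetical.

```python
def update_odds(prior_odds: float, likelihood_ratios: list[float]) -> float:
    """Multiply the prior odds by each likelihood ratio in turn.

    Assumes the pieces of evidence are conditionally independent,
    which is a simplification made for this illustration.
    """
    odds = prior_odds
    for lr in likelihood_ratios:
        odds *= lr
    return odds


def odds_to_probability(odds: float) -> float:
    """Convert odds in favor of a hypothesis into a probability."""
    return odds / (1.0 + odds)


# Hypothetical case: prior odds of 1 to 1,000 that the suspect is the speaker.
prior_odds = 1 / 1000
# Invented likelihood ratios: a voice comparison that supports the hypothesis,
# a second item that weakly contradicts it, and a third that supports it.
evidence = [64.0, 0.5, 10.0]

posterior_odds = update_odds(prior_odds, evidence)
posterior_probability = odds_to_probability(posterior_odds)
print(f"posterior odds: {posterior_odds:.2f}")
print(f"posterior probability: {posterior_probability:.2f}")
```

Even a voice comparison with a likelihood ratio of 64 leaves the probability well short of certainty in this invented scenario, because the result depends on the starting odds and on everything else the fact finder knows.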

In the guidelines for forensic science published in June 2015, the European Network of Forensic Science Institutes recommends the use of a Bayesian framework and especially of the likelihood ratio. However, according to the INTERPOL report, only 18 of the 44 experts surveyed had made the switch.

A major challenge in applying Bayesian statistics is determining how typical a given voice is. There are no established statistical standards for the distribution of voice characteristics, which makes it hard to gauge the typicality of a particular voice.

“If you have a database of two million fingerprints you can be quite confident of the reliability of your estimates, but voice databases are much smaller,” explained Paoloni. For example, the DyViS database used in the U.K. includes 100 male speakers, most of them educated at Cambridge University. Meanwhile, Moreno is certain that some police databases, which are not public, contain thousands of voices, and that some organizations have databases reaching hundreds of thousands of speakers.
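The effect of database size on the reliability of an estimate can be illustrated with the standard error of a proportion. The Python sketch below assumes simple random sampling and a hypothetical voice characteristic found in 5 percent of speakers; both assumptions are for illustration only, and a database drawn largely from one university would not be a random sample of the population.

```python
import math


def standard_error(proportion: float, sample_size: int) -> float:
    """Standard error of an estimated proportion under simple random sampling."""
    return math.sqrt(proportion * (1.0 - proportion) / sample_size)


# Hypothetical: a voice characteristic present in 5 percent of speakers.
assumed_frequency = 0.05

small_db_error = standard_error(assumed_frequency, 100)        # 100 speakers
large_db_error = standard_error(assumed_frequency, 2_000_000)  # two million records

print(f"100 speakers:      5% +/- {small_db_error:.2%}")
print(f"2,000,000 records: 5% +/- {large_db_error:.4%}")
```

Under these assumptions, the uncertainty around the 5 percent figure is roughly 140 times larger with 100 speakers than with two million records, which is why estimates of how common a voice is remain shaky when the reference database is small.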

“In the era of big data, the most reasonable thing to do would be to set up a corpus with a large amount of data,” modeled on the platforms that provide online services, said Paoloni. Morrison’s approach is different: it involves gathering audio recordings of speakers from specific demographic groups chosen to fit the case at hand.

These recordings encompass various characteristics, such as gender, language, dialect, and speaking style variations like tiredness, excitement, or drowsiness. The problem, however, “is that many laboratories say that they don’t have any kind of database,” according to Daniel Ramos, a scientist at the Universidad Autónoma of Madrid who also collaborates with a Spanish police force, the Guardia Civil.

Our investigation into the current state of forensic phonetics has revealed several limitations in the science of voice identification. We recommend that results from this field be approached with extreme caution. Paoloni stated, “In my opinion, no one should be convicted solely based on voice identification.” In dubio pro reo (when in doubt, judge in favor of the accused) should definitely carry the day when ASR is used during criminal investigations and prosecutions. The potential for error with voice identification is simply too great for anyone to be found guilty beyond a reasonable doubt on the basis of ASR evidence.

Sources: Richard Leo, Police Interrogation and American Justice; Raymond Whitall story (San Francisco Chronicle, June 4, 2024); NITV Federal Services; Berkeley Director of Police Hansel Alejandro Aguilar; ABC News; National Institute for Truth Verification; Professor Maria Hartwig at John Jay College of Criminal Justice; European Network of Forensic Science Institutes; Forensic Linguistics; Geoffrey Stewart Morrison, Professor at University of Alberta, Canada; Didier Meuwly, Netherlands Forensic Institute; Lawrence G. Kersta, Journal Nature Forensic Linguistics; Juana Gil Fernandez, Forensic Speech Scientist; Forensic Science International by Interpol; Forensic Magazine; and ProPublica.

As a digital subscriber to Criminal Legal News, you can access full text and downloads for this and other premium content.